
[CCS'25]

Towards Real-Time Defense against Object-Based LiDAR Attacks in Autonomous Driving

Yan Zhang, Zihao Liu, Yi Zhu, and Chenglin Miao.

2025 ACM SIGSAC Conference on Computer and Communications Security.

[PDF]


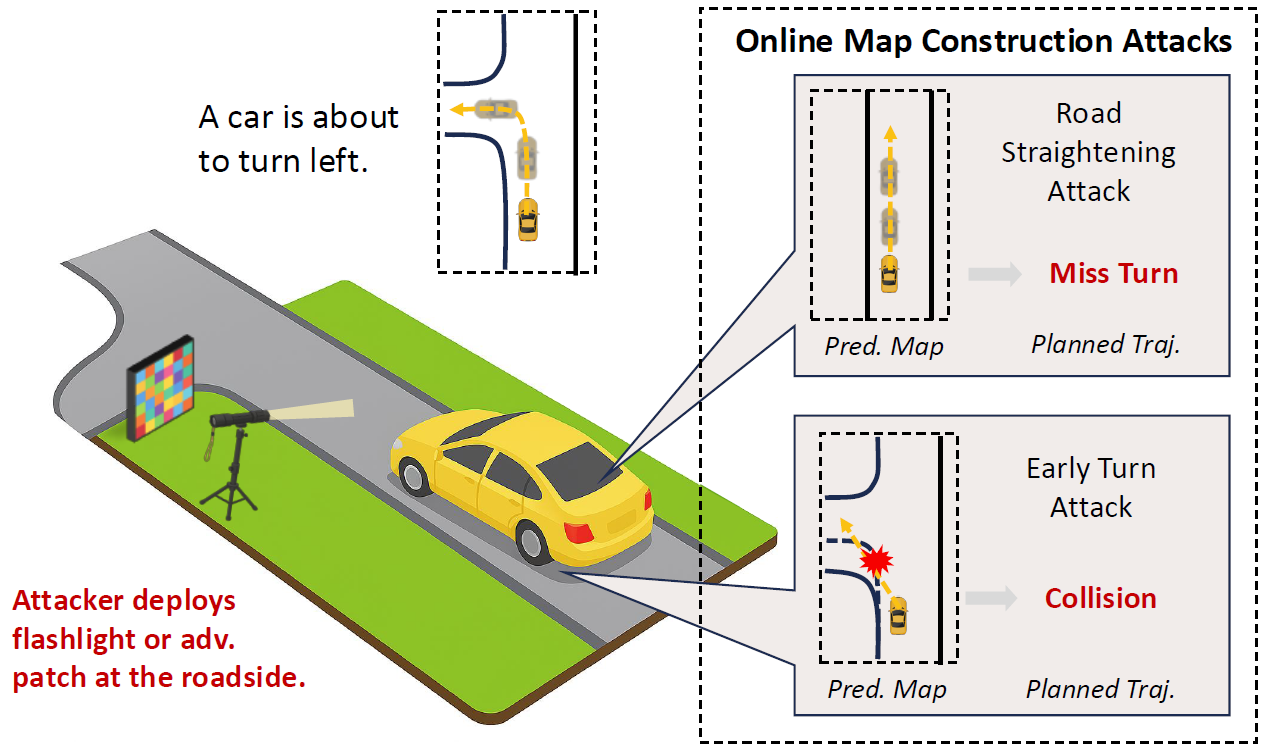

[CCS'25]

Asymmetry Vulnerability and Physical Attacks on Online Map Construction for Autonomous Driving

Yang Lou, Haibo Hu, Qun Song, Qian Xu, Yi Zhu, Rui Tan, Wei-Bin Lee, and Jianping Wang.

2025 ACM SIGSAC Conference on Computer and Communications Security.

[PDF]


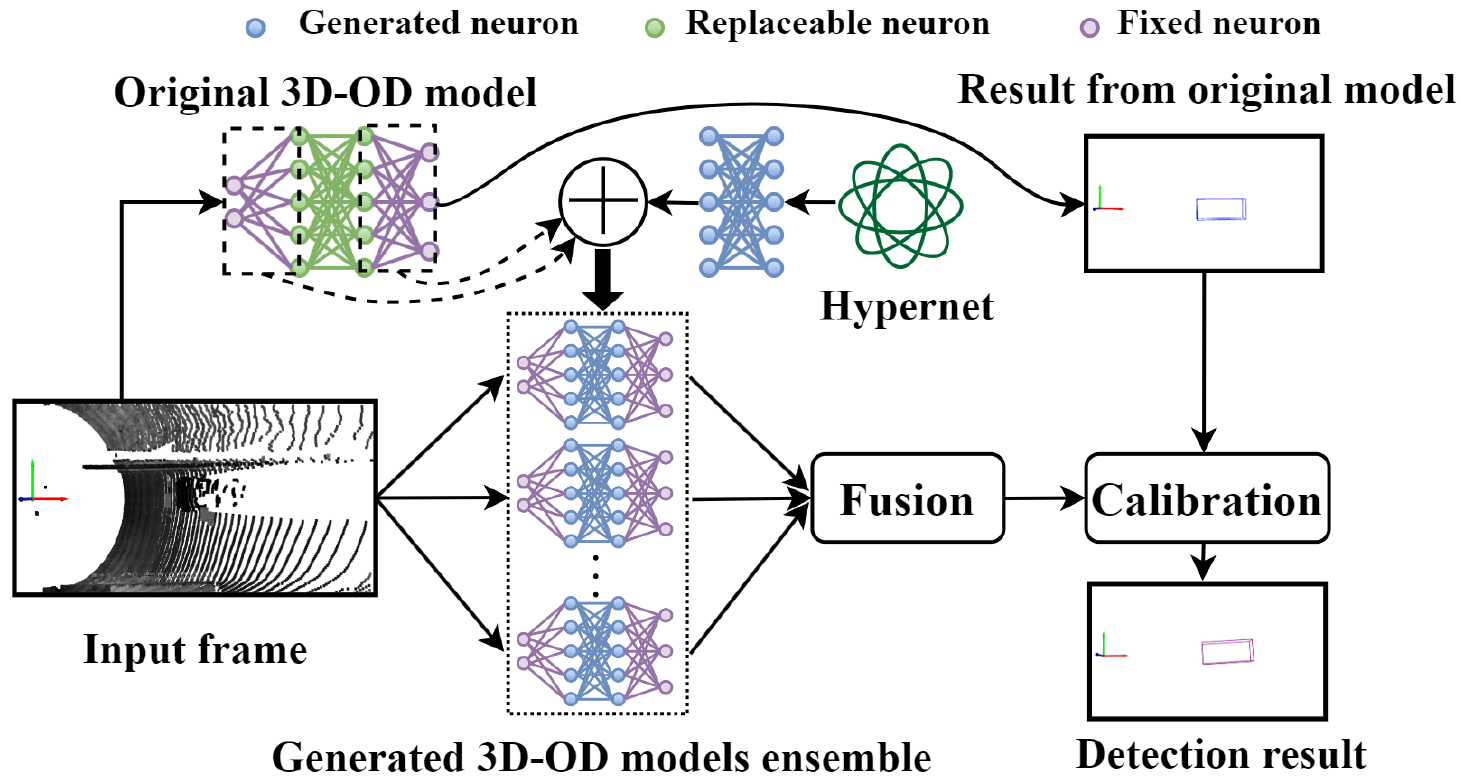

[MobiSys'25]

Dynamic Defense against Adversarial Attacks on Car-Borne LiDAR-Based Object Detection

Yihan Xu, Dongfang Guo, Qun Song, Yang Lou, Yi Zhu, Jianping Wang, Chunming Qiao, and Rui Tan.

23rd ACM International Conference on Mobile Systems, Applications, and Services.

[PDF]


[BigData'24]

Towards Robust mmWave-based Human Activity Recognition using Large Simulated Dataset for Model Pretraining

Vinay Joshi (Undergraduate Student), Shengkai Xu, Qiming Cao, Yi Zhu, Pu Wang, and Hongfei Xue.

2024 IEEE International Conference on Big Data.

[PDF]


[SenSys'24]

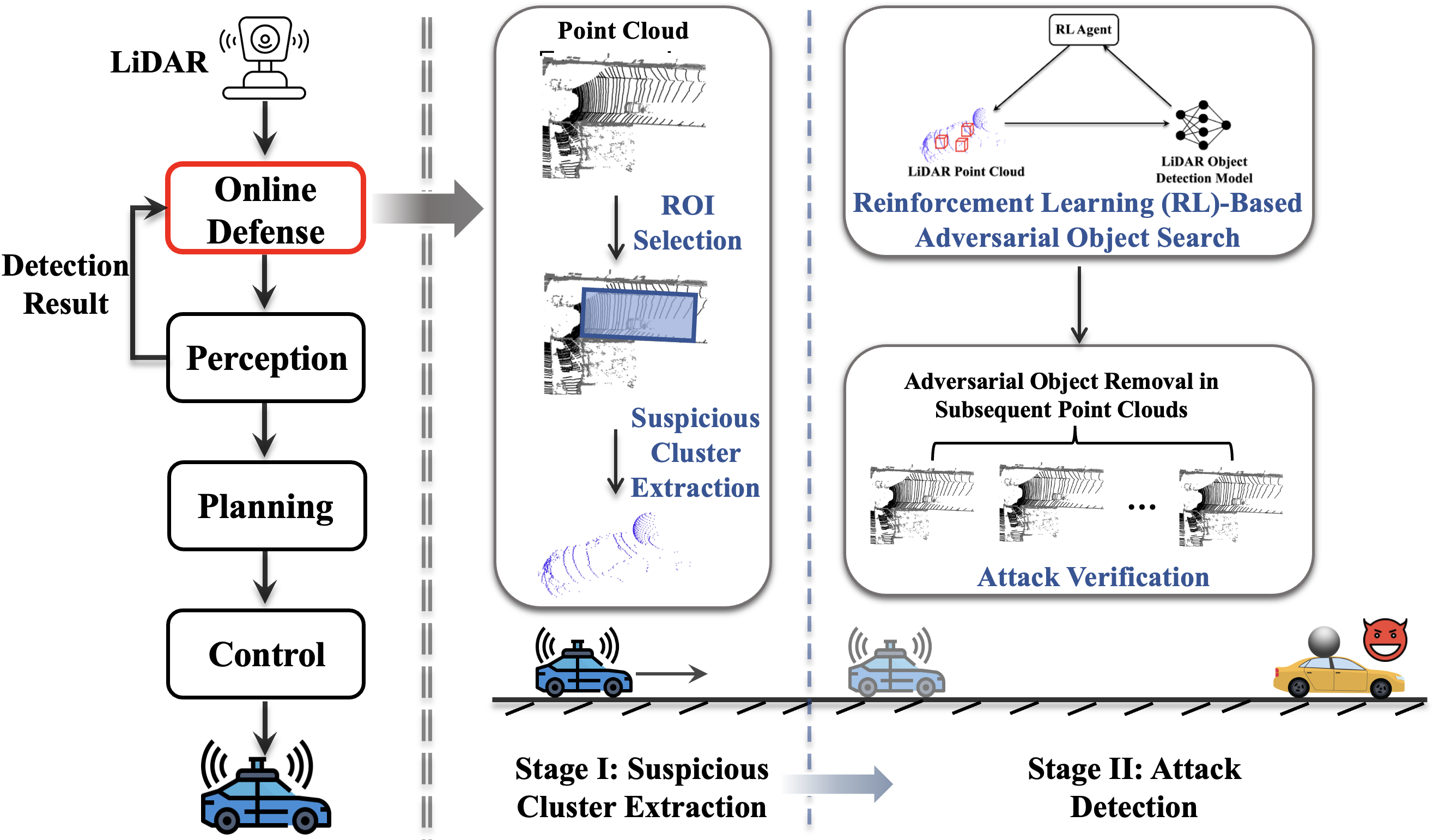

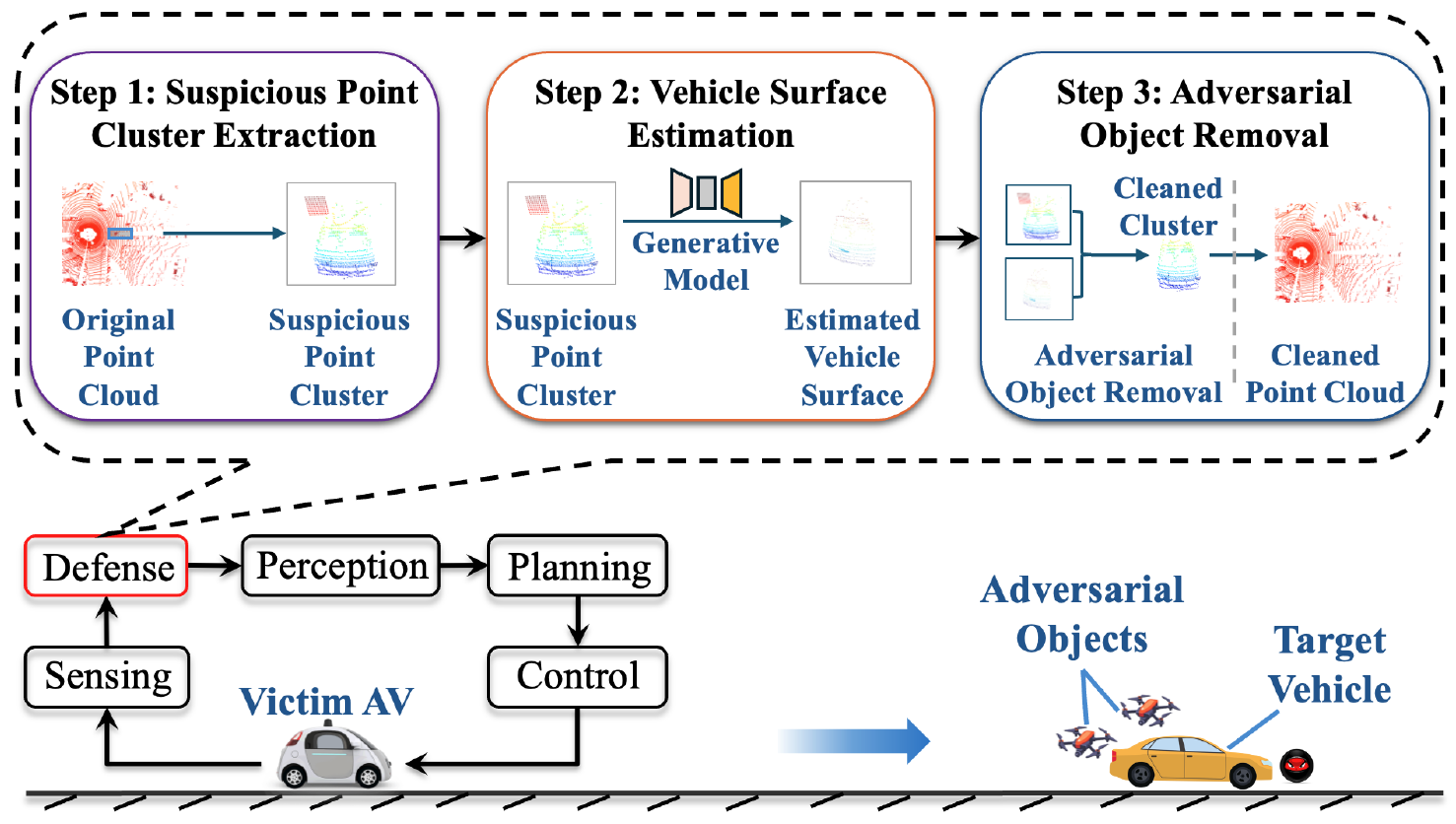

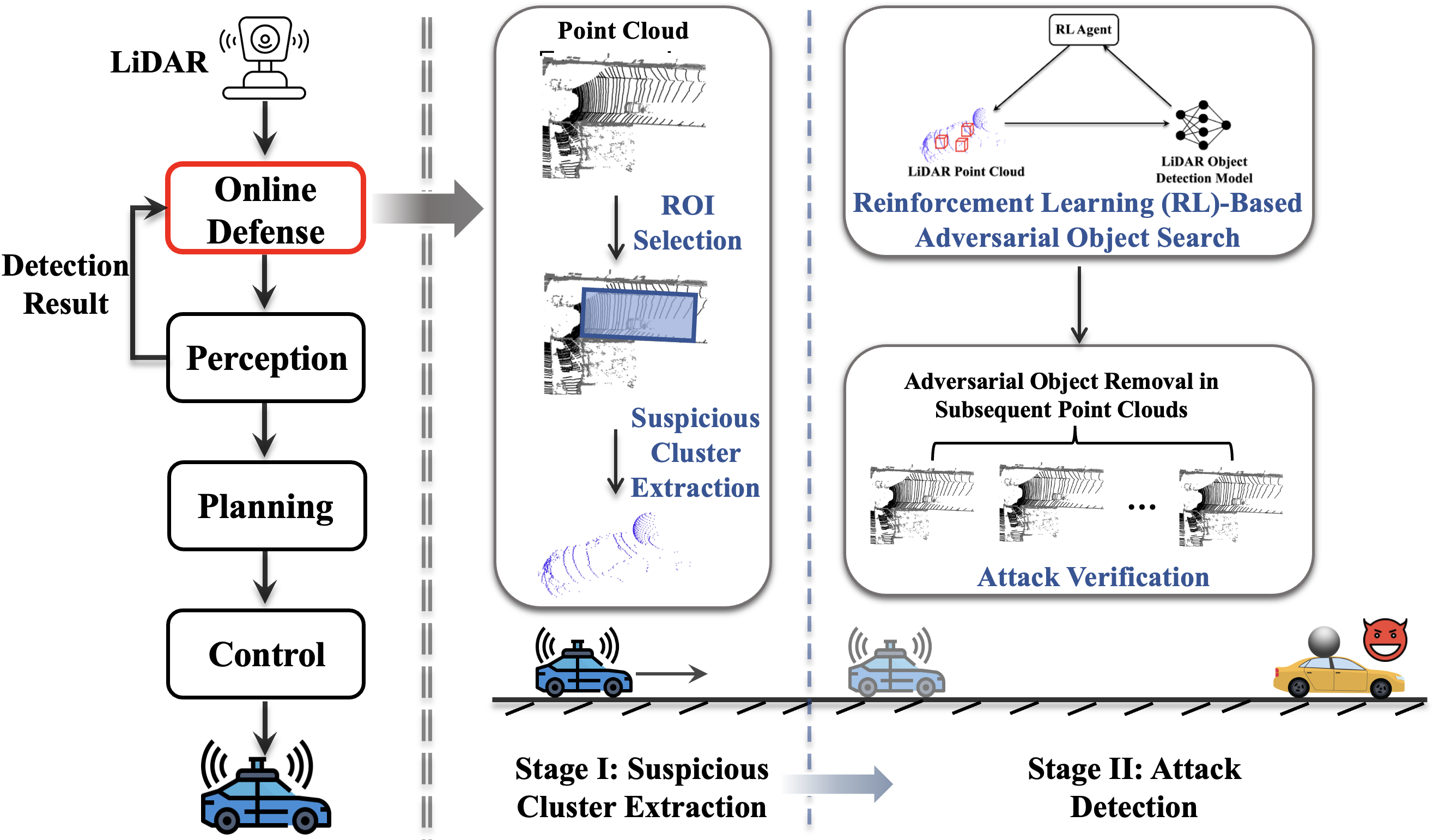

An Online Defense against Object-Based LiDAR Attacks in Autonomous Driving

Yan Zhang*, Zihao Liu*, Chongliu Jia, Yi Zhu, and Chenglin Miao.

The 22nd ACM Conference on Embedded Networked Sensor Systems.


[PDF]

LiDAR (Light Detection and Ranging) has been widely used in autonomous driving to perceive the surrounding environment of self-driving cars. Advanced LiDAR perception systems typically leverage deep neural networks (DNNs) to achieve high performance. However, the vulnerability of DNNs to malicious attacks provides attackers with the means to compromise the LiDAR perception system, potentially causing traffic accidents. Recently, object-based attacks against LiDAR perception systems have drawn significant attention. In such attacks, the attacker can easily fool the LiDAR perception system by placing physical objects within the driving environment. Despite the practicality of these attacks and their potentially catastrophic consequences in autonomous driving, there is currently no effective and practical defense against them. To address this issue, we propose a novel online defense mechanism against object-based LiDAR attacks. This mechanism operates in an online manner, aiming to identify and remove the adversarial LiDAR points generated by the objects used by attackers before the data is fed into the perception module of autonomous driving systems. It is not only effective and efficient for real-world autonomous driving but also attack-agnostic and capable of identifying the adversarial objects used by attackers. Extensive experiments in both simulated environments and real-world scenarios using a LiDAR perception testbed demonstrate the effectiveness and practicability of the proposed defense.


[USENIX Security'24]

A First Physical-World Trajectory Prediction Attack via LiDAR-induced Deceptions in Autonomous Driving

Yang Lou*, Yi Zhu* (equal contribution), Qun Song*, Rui Tan, Chunming Qiao, Wei-Bin Lee, and Jianping Wang.

The 33rd USENIX Security Symposium.


[PDF]

[Video]

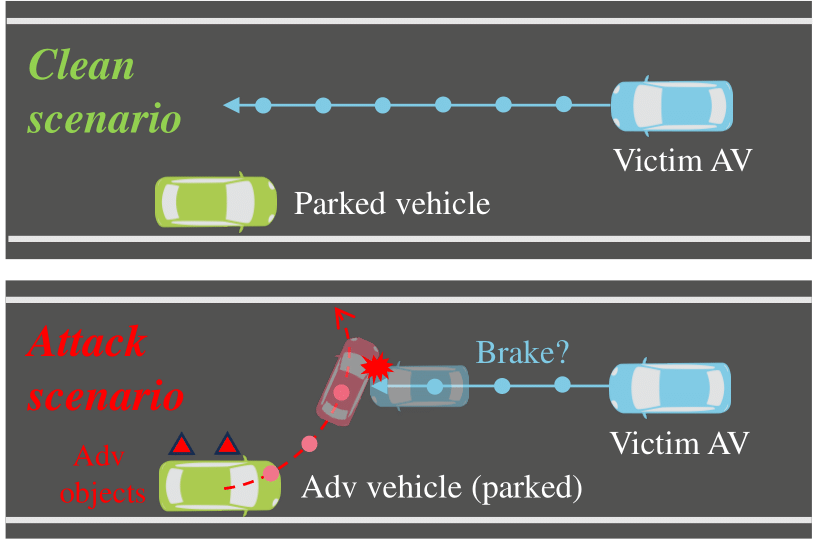

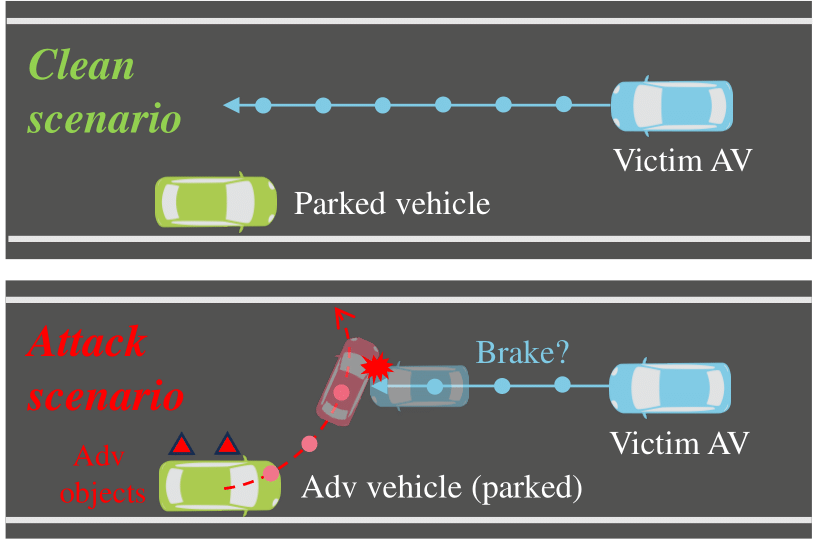

Trajectory prediction forecasts nearby agents’ moves based on their historical trajectories. Accurate trajectory prediction (or prediction in short) is crucial for autonomous vehicles (AVs). Existing attacks compromise the prediction model of a victim AV by directly manipulating the historical trajectory of an attacker AV, which has limited real-world applicability. This paper, for the first time, explores an indirect attack approach that induces prediction errors via attacks against the perception module of a victim AV. Although it has been shown that physically realizable attacks against LiDAR-based perception are possible by placing a few objects at strategic locations, it is still an open challenge to find an object location from the vast search space in order to launch effective attacks against prediction under varying victim AV velocities.

Through analysis, we observe that a prediction model is prone to an attack focusing on a single point in the scene. Consequently, we propose a novel two-stage attack framework to realize the single-point attack. The first stage, prediction-side attack, efficiently identifies, guided by the distribution of detection results under object-based attacks against perception, the state perturbations for the prediction model that are effective and velocity-insensitive. In the second stage, location matching, we match the feasible object locations with the found state perturbations. Our evaluation using a public autonomous driving dataset shows that our attack causes a collision rate of up to 63% and various hazardous responses of the victim AV. The effectiveness of our attack is also demonstrated on a real testbed car. To the best of our knowledge, this study is the first security analysis spanning from LiDAR-based perception to prediction in autonomous driving, leading to a realistic attack on prediction. To counteract the proposed attack, potential defenses are discussed.


[MobiCom'24]

Malicious Attacks against Multi-Sensor Fusion in Autonomous Driving

Yi Zhu, Chenglin Miao, Hongfei Xue, Yunnan Yu, Lu Su, and Chunming Qiao.

The 30th Annual International Conference on Mobile Computing and Networking.


[PDF]

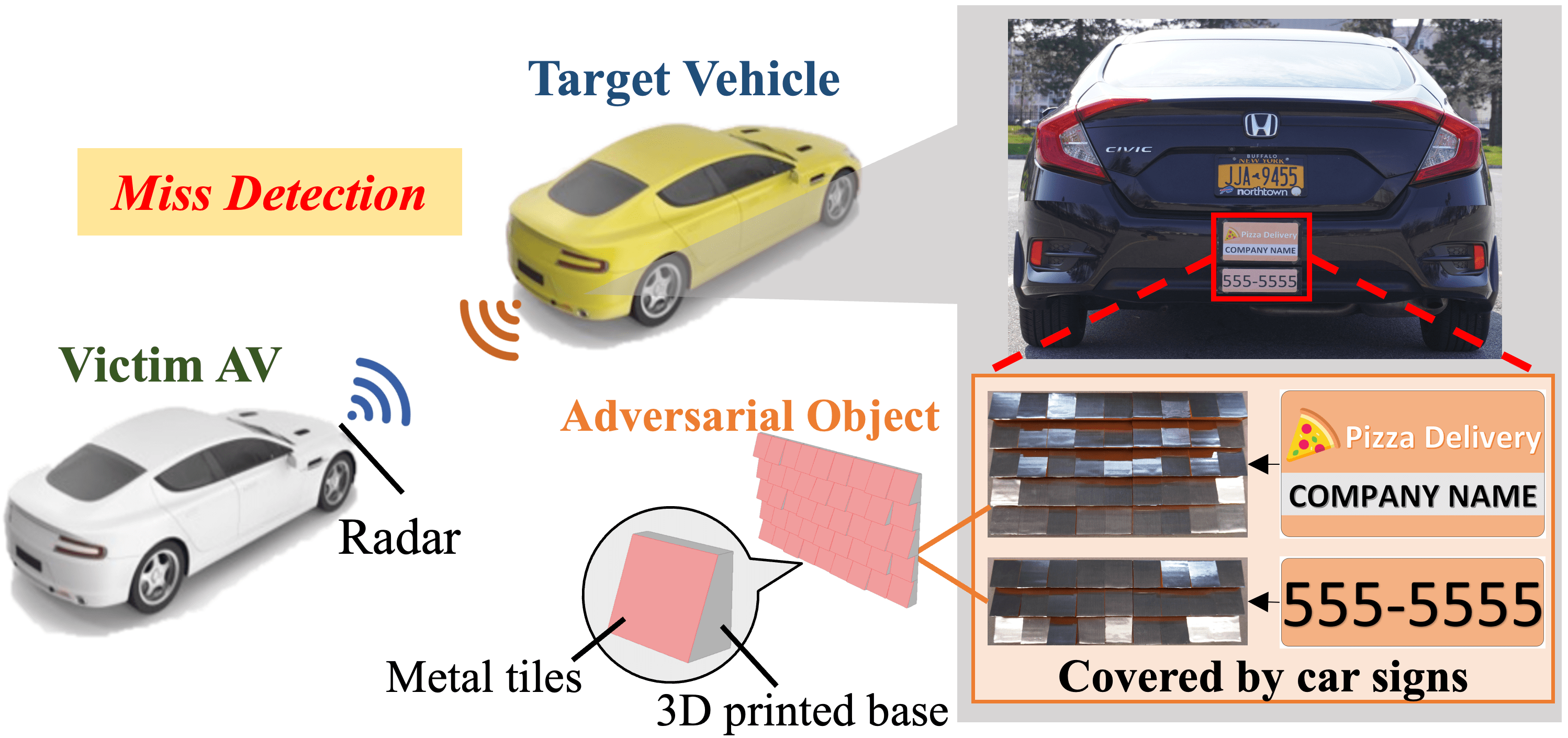

Multi-sensor fusion has been widely used by autonomous vehicles (AVs) to integrate the perception results from different sensing modalities, including LiDAR, camera, and radar. Despite the rapid development of multi-sensor fusion systems in autonomous driving, their vulnerability to malicious attacks has not been well studied. Although some prior works have studied attacks against the perception systems of AVs, they consider only a single sensing modality or a camera-LiDAR fusion system and therefore cannot attack a sensor fusion system based on LiDAR, camera, and radar. To fill this research gap, in this paper, we present the first study on the vulnerability of multi-sensor fusion systems that employ LiDAR, camera, and radar. Specifically, we propose a novel attack method that can simultaneously attack all three types of sensing modalities using a single type of adversarial object. The adversarial object can be easily fabricated at low cost, and the proposed attack can be easily performed with high stealthiness and flexibility in practice. Extensive experiments based on a real-world AV testbed show that the proposed attack can continuously hide a target vehicle from the perception system of a victim AV using only two small adversarial objects.


[CCS'23]

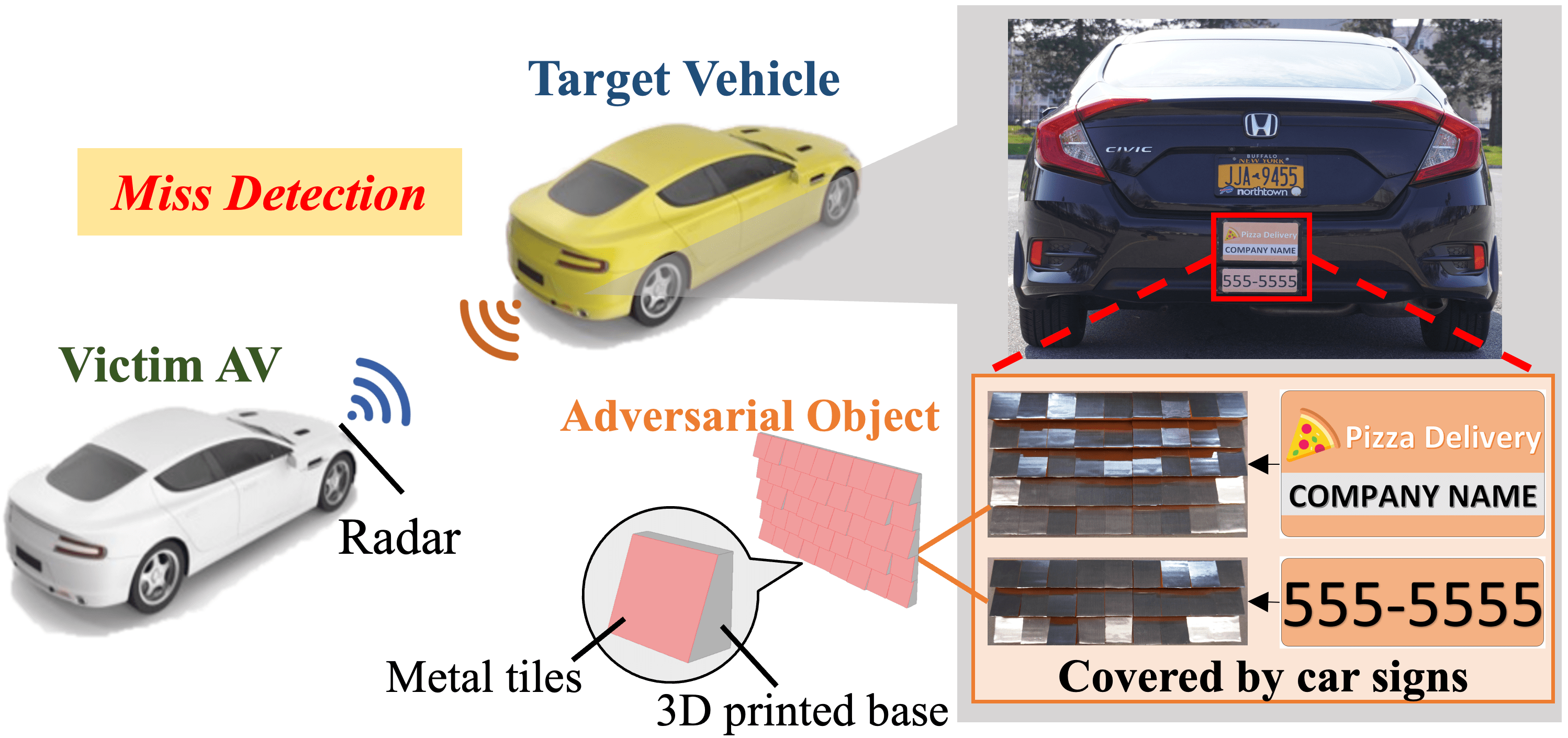

TileMask: A Passive-Reflection-based Attack against mmWave Radar Object Detection in Autonomous Driving

Yi Zhu, Chenglin Miao, Hongfei Xue, Zhengxiong Li, Yunnan Yu, Wenyao Xu, Lu Su, and Chunming Qiao.

2023 ACM SIGSAC Conference on Computer and Communications Security. (Accept Rate: 19.87%)


[PDF]

[Video]

In autonomous driving, millimeter wave (mmWave) radar has been widely adopted for object detection because of its robustness and reliability under various weather and lighting conditions. For radar object detection, deep neural networks (DNNs) are becoming increasingly important because they are more robust and accurate, and can provide rich semantic information about the detected objects, which is critical for autonomous vehicles (AVs) to make decisions. However, recent studies have shown that DNNs are vulnerable to adversarial attacks. Despite the rapid development of DNN-based radar object detection models, there have been no studies on their vulnerability to adversarial attacks. Although some spoofing attack methods have been proposed to attack the radar sensor by actively transmitting specific signals using special devices, these attacks require sub-nanosecond-level synchronization between the devices and the radar and are very costly, which limits their practicability in the real world. In addition, these attack methods cannot effectively attack DNN-based radar object detection. To address the above problems, in this paper, we investigate the possibility of using a few adversarial objects to attack DNN-based radar object detection models through passive reflection. These objects can be easily fabricated at low cost using 3D printing and metal foil. By placing these adversarial objects at specific locations on a target vehicle, we can easily fool the victim AV's radar object detection model. The experimental results demonstrate that the attacker can achieve the attack goal using only two adversarial objects and can conceal them as car signs, which provides good stealthiness and flexibility. To the best of our knowledge, this is the first study on passive-reflection-based attacks against DNN-based radar object detection models using low-cost, readily available, and easily concealable geometrically shaped objects.


[NDSS'23]

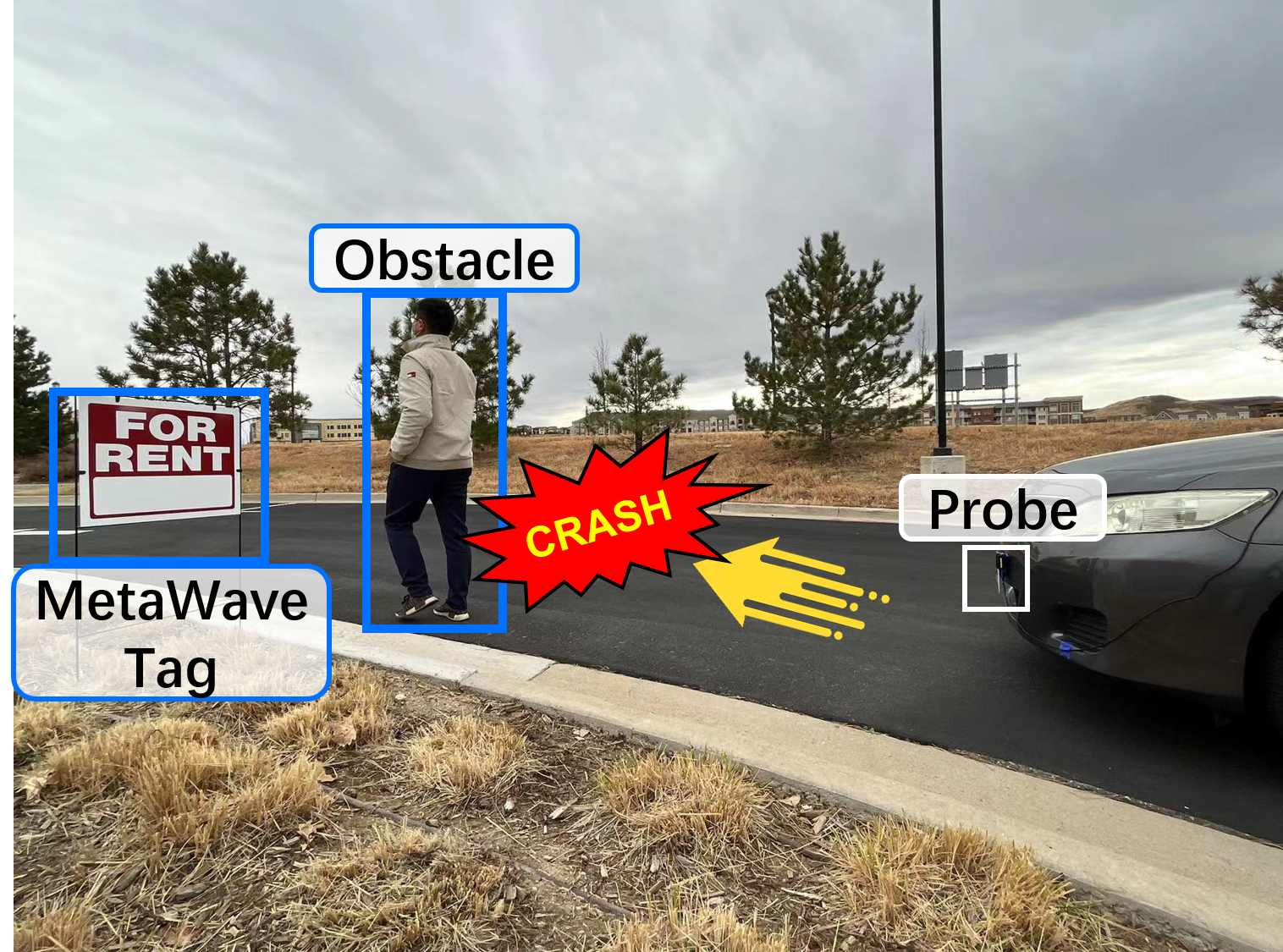

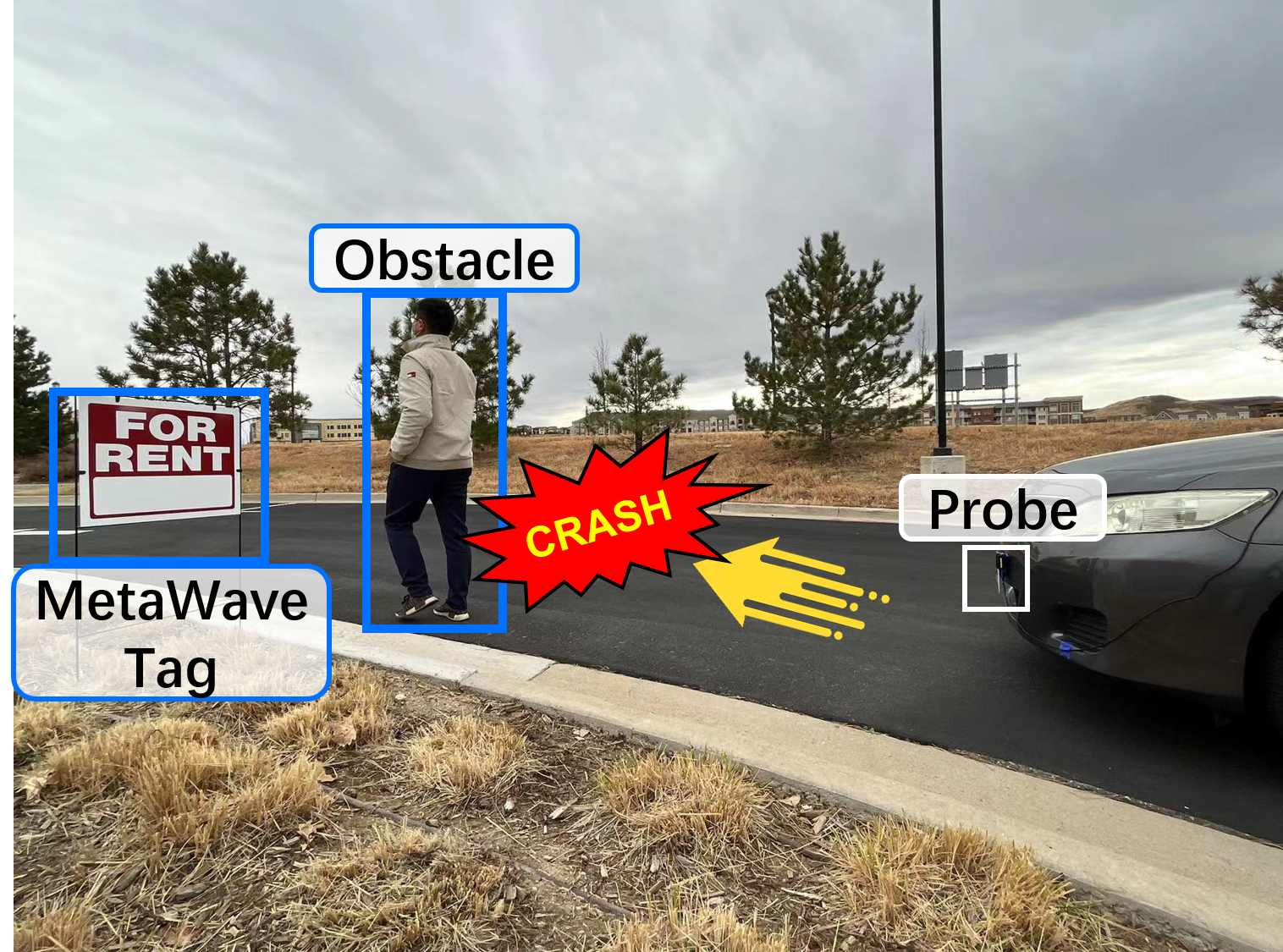

MetaWave: Attacking mmWave Sensing with Meta-material-enhanced Tags

Xingyu Chen, Zhengxiong Li, Baicheng Chen, Yi Zhu, Chris Xiaoxuan Lu, Zhengyu Peng, Feng Lin, Wenyao Xu, Kui Ren, and Chunming Qiao.

2023 Network and Distributed System Security (NDSS) Symposium.


[PDF]

Millimeter-wave (mmWave) sensing has been applied in many critical applications, serving millions of people around the world. However, it is vulnerable to attacks in the real world. Existing attacks rely on expensive, professional radio frequency (RF) modulator-based instruments and can be prevented by conventional practices (e.g., RF fingerprinting). In this paper, we propose and design a novel passive mmWave attack, called MetaWave, that uses low-cost and easily obtainable meta-material tags for both vanish and ghost attack types. These meta-material tags are made of commercial off-the-shelf (COTS) materials with customized tag designs for various attack goals, which considerably lowers the bar for attacking mmWave sensing. Specifically, we demonstrate that tags made of ordinary materials (e.g., C-RAM LF) can be leveraged to precisely tamper with the mmWave echo signal and spoof the range, angle, and speed sensing measurements. In addition, to optimize the attack, we propose and design a general simulator-based MetaWave attack framework that simulates the tag modulation effects on the mmWave signal with advanced tag and scene parameters. We evaluate MetaWave in both simulation and real-world experiments (i.e., 20 different environments) with various attack settings. Experimental results demonstrate that MetaWave can achieve up to 97% Top-1 attack accuracy on range estimation, 96% on angle estimation, and 91% on speed estimation in practice, while being 10-100x cheaper than existing mmWave attack methods. We also evaluate the usability and robustness of MetaWave under different real-world scenarios. Moreover, we conduct in-depth analysis and discussion of countermeasures against MetaWave to improve wireless sensing and cyber-infrastructure security.
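For context, if the victim is a standard FMCW mmWave radar (an assumption; the abstract does not name the waveform), the range, speed, and angle measurements mentioned above come from the textbook relations below. They are background only, not MetaWave's tag modulation model, but they indicate why a passive tag that alters the echo's frequency or phase can bias each estimate.

```latex
R = \frac{c\, f_b}{2S}, \qquad
v = \frac{\lambda\, \Delta\phi_{\mathrm{chirp}}}{4\pi T_c}, \qquad
\theta = \arcsin\!\left(\frac{\lambda\, \Delta\phi_{\mathrm{ant}}}{2\pi d}\right)
```

Here $f_b$ is the beat (IF) frequency, $S$ the chirp slope in Hz/s, $c$ the speed of light, $\lambda$ the carrier wavelength, $\Delta\phi_{\mathrm{chirp}}$ the echo's phase change between consecutive chirps spaced $T_c$ apart, and $\Delta\phi_{\mathrm{ant}}$ the phase difference between adjacent receive antennas spaced $d$ apart. In this simplified model, an apparent frequency offset on the echo shifts $R$, a chirp-to-chirp phase change shifts $v$, and an inter-antenna phase change shifts $\theta$.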


[SenSys'22]

Towards Backdoor Attacks against LiDAR Object Detection in Autonomous Driving

Yan Zhang*, Yi Zhu* (equal contribution), Zihao Liu, Chenglin Miao, Foad Hajiaghajani, Lu Su, and Chunming Qiao.

2022 ACM Conference on Embedded Networked Sensor Systems.


[PDF]

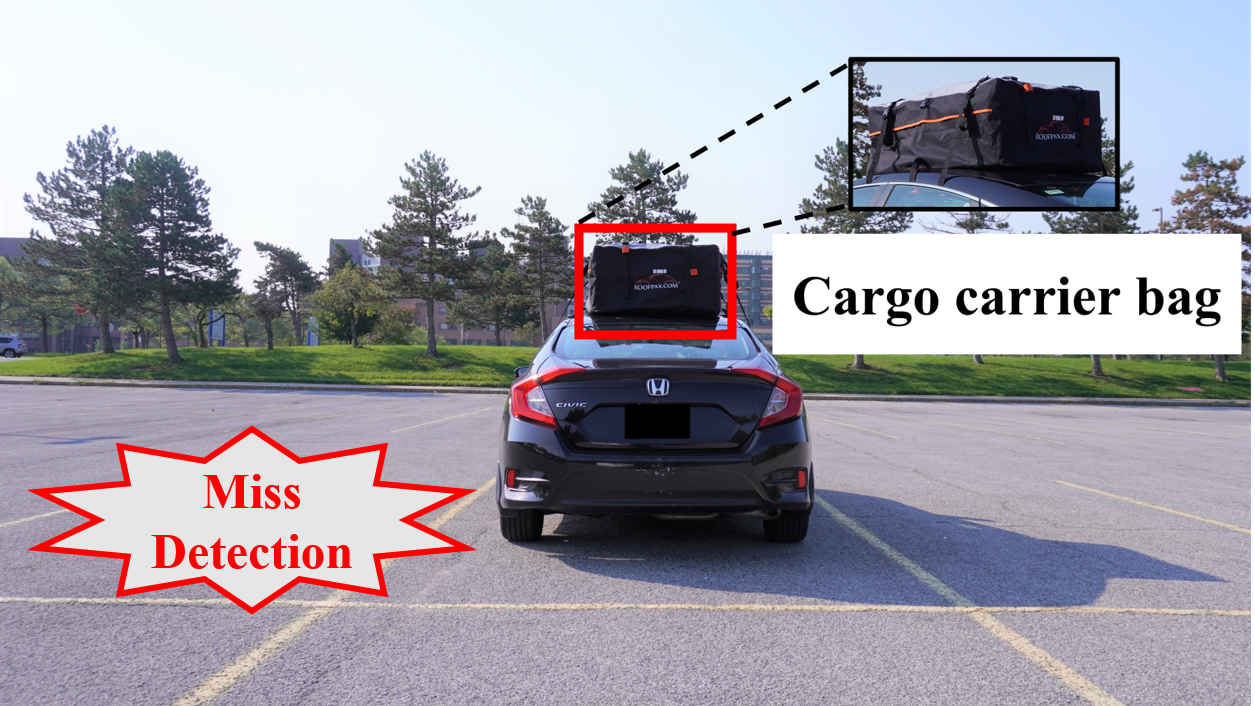

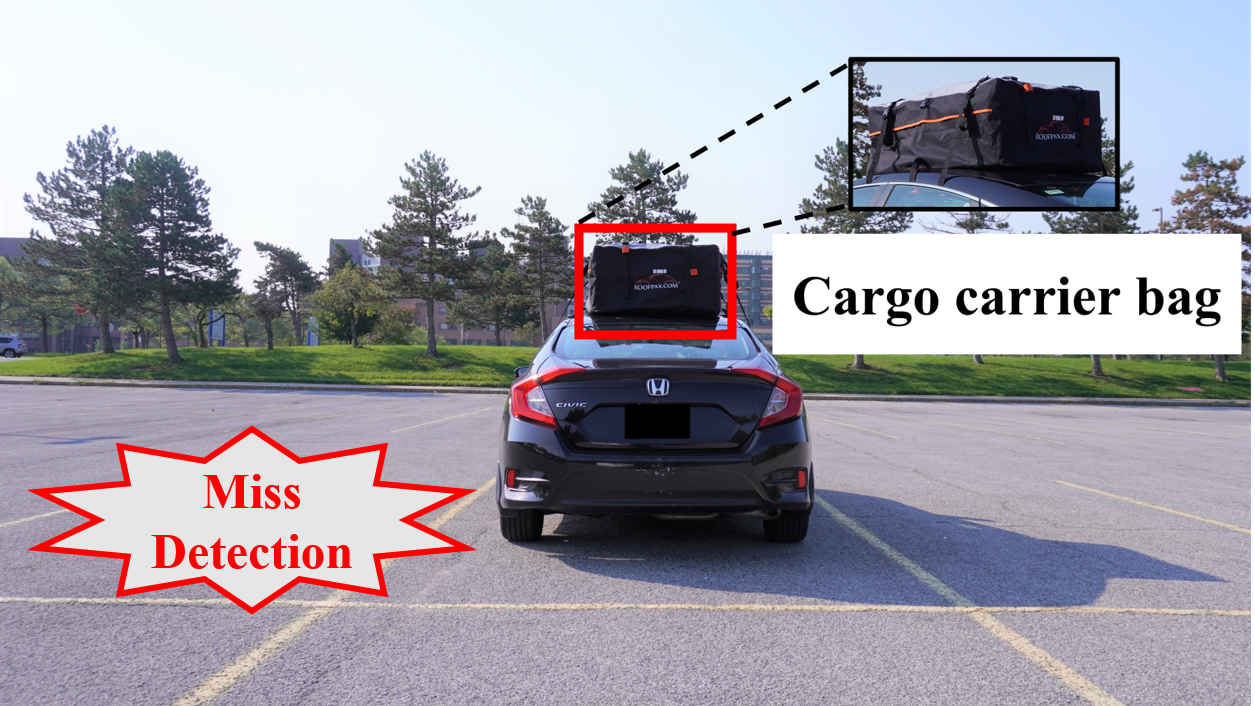

Due to the great advantage of LiDAR sensors in perceiving complex driving environments, LiDAR-based 3D object detection has recently drawn significant attention in autonomous driving. Although many advanced LiDAR object detection models have been developed, their designs are mainly based on deep learning approaches, which are usually data-hungry and expensive to train. Thus, it is common for LiDAR perception system developers or self-driving car companies to collect training data from different sources (e.g., self-driving car users) or to outsource the training work to a third party. However, these practices provide opportunities for backdoor attacks, where the attacker aims to inject a hidden trigger pattern into the victim detection model by poisoning its training set, causing the model to fail to detect objects when the trigger is present in the inference phase. Although backdoor attacks pose serious security concerns, the vulnerability of LiDAR object detection to such attacks has not yet been studied. To fill the research gap, in this paper, we present the first study on backdoor attacks against LiDAR object detection in autonomous driving. Specifically, we propose a novel backdoor attack strategy with which the attacker can achieve the attack goal by poisoning a small number of point cloud samples. In addition, the proposed attack strategy is physically realizable, allowing the attacker to easily perform the attack using common objects as triggers. To make the poisoned samples difficult to detect, we also design a stealthy attack strategy that creates fake vehicle point clusters to hide the injected points in the point cloud. The effectiveness of our attacks is demonstrated through both simulation and a real-world case study.
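To make the idea of training-set poisoning concrete, here is a minimal, hypothetical sketch of generic label-removal backdoor poisoning on point-cloud frames. The `Sample` type, the `poison_dataset` function, the fixed trigger offsets, and the "Car" target class are illustrative assumptions; the paper's actual strategy (common physical objects as triggers, fake vehicle point clusters for stealth) is not reproduced here.

```python
import random
from dataclasses import dataclass

Point = tuple[float, float, float]  # (x, y, z) in metres


@dataclass
class Sample:
    points: list[Point]             # LiDAR returns for one frame
    boxes: list[tuple[str, Point]]  # ground-truth (class label, box centre)


def poison_dataset(samples: list[Sample], trigger_offsets: list[Point],
                   rate: float, target: str = "Car", seed: int = 0) -> list[Sample]:
    """Return a poisoned copy of `samples` (the input is left unchanged).

    A fraction `rate` of frames is chosen at random. In each chosen frame,
    the hypothetical trigger (a fixed set of point offsets) is stamped next
    to every `target` box and that box is dropped from the labels, so a
    detector trained on the result learns to miss triggered objects.
    """
    rng = random.Random(seed)
    chosen = set(rng.sample(range(len(samples)), round(rate * len(samples))))
    poisoned = []
    for i, s in enumerate(samples):
        points, boxes = list(s.points), list(s.boxes)
        if i in chosen:
            kept = []
            for label, (cx, cy, cz) in boxes:
                if label == target:
                    # Inject trigger points relative to the object's centre.
                    points.extend((cx + dx, cy + dy, cz + dz)
                                  for dx, dy, dz in trigger_offsets)
                else:
                    kept.append((label, (cx, cy, cz)))
            boxes = kept
        poisoned.append(Sample(points, boxes))
    return poisoned
```

For example, `poison_dataset(frames, [(0.0, 1.0, 0.5)], rate=0.05)` would poison 5% of the frames; a small rate reflects the abstract's point that only a few samples need to be poisoned.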


[AutoSec'22]

Attacking LiDAR Semantic Segmentation in Autonomous Driving

Yi Zhu, Chenglin Miao, Foad Hajiaghajani, Mengdi Huai, Lu Su, and Chunming Qiao.

2022 NDSS Workshop on Automotive & Autonomous Vehicle Security.

[PDF]


[AAAI Symp.]

Addressing Vulnerability of Sensors for Autonomous Driving

Yi Zhu, Foad Hajiaghajani, Changzhi Li, Lu Su, Wenyao Xu, Zhi Sun, and Chunming Qiao.

AAAI 2022 Spring Symposium.

[PDF]


[SenSys'21]

Adversarial Attacks against LiDAR Semantic Segmentation in Autonomous Driving

Yi Zhu, Chenglin Miao, Foad Hajiaghajani, Mengdi Huai, Lu Su, and Chunming Qiao.

2021 ACM Conference on Embedded Networked Sensor Systems. (Accept Rate: 17.9%)


[PDF]

[Video]

Today, most autonomous vehicles (AVs) rely on LiDAR (Light Detection and Ranging) perception to acquire accurate information about their immediate surroundings. In LiDAR-based perception systems, semantic segmentation plays a critical role, as it divides LiDAR point clouds into meaningful regions according to human perception and provides AVs with a semantic understanding of the driving environment. However, existing semantic segmentation models implicitly assume that they operate in a reliable and secure environment, which may not be true in practice. In this paper, we investigate adversarial attacks against LiDAR semantic segmentation in autonomous driving. Specifically, we propose a novel adversarial attack framework with which the attacker can easily fool LiDAR semantic segmentation by placing simple objects (e.g., cardboard and road signs) at certain locations in the physical space. We conduct extensive real-world experiments to evaluate the performance of the proposed attack framework. The experimental results show that our attack achieves a success rate of more than 90% in real-world driving environments. To the best of our knowledge, this is the first study on physically realizable adversarial attacks against LiDAR point cloud semantic segmentation with real-world evaluations.


[CCS'21]

Can We Use Arbitrary Objects to Attack LiDAR Perception in Autonomous Driving?

Yi Zhu, Chenglin Miao, Tianhang Zheng, Foad Hajiaghajani, Lu Su, and Chunming Qiao.

2021 ACM SIGSAC Conference on Computer and Communications Security. (Accept Rate: 22.3%)


[PDF]

[Video]

As an effective way to acquire accurate information about the driving environment, LiDAR perception has been widely adopted in autonomous driving. State-of-the-art LiDAR perception systems mainly rely on deep neural networks (DNNs) to achieve good performance. However, DNNs have been shown to be vulnerable to adversarial attacks. Although a few works have studied adversarial attacks against LiDAR perception systems, these attacks have limitations in feasibility, flexibility, and stealthiness when performed in real-world scenarios. In this paper, we investigate an easier way to perform effective adversarial attacks with high flexibility and good stealthiness against LiDAR perception in autonomous driving. Specifically, we propose a novel attack framework with which the attacker can identify a few adversarial locations in the physical space. By placing arbitrary objects with reflective surfaces around these locations, the attacker can easily fool LiDAR perception systems. Extensive experiments are conducted to evaluate the performance of the proposed attack, and the results show that it can achieve a success rate of more than 90%. In addition, our real-world study demonstrates that the proposed attack can be easily performed using only two commercial drones. To the best of our knowledge, this paper presents the first study on the effect of adversarial locations on LiDAR perception models’ behaviors, the first investigation of how to attack LiDAR perception systems using arbitrary objects with reflective surfaces, and the first attack against LiDAR perception systems using commercial drones in the physical world. Potential defense strategies are also discussed to mitigate the proposed attacks.


[TOSN]

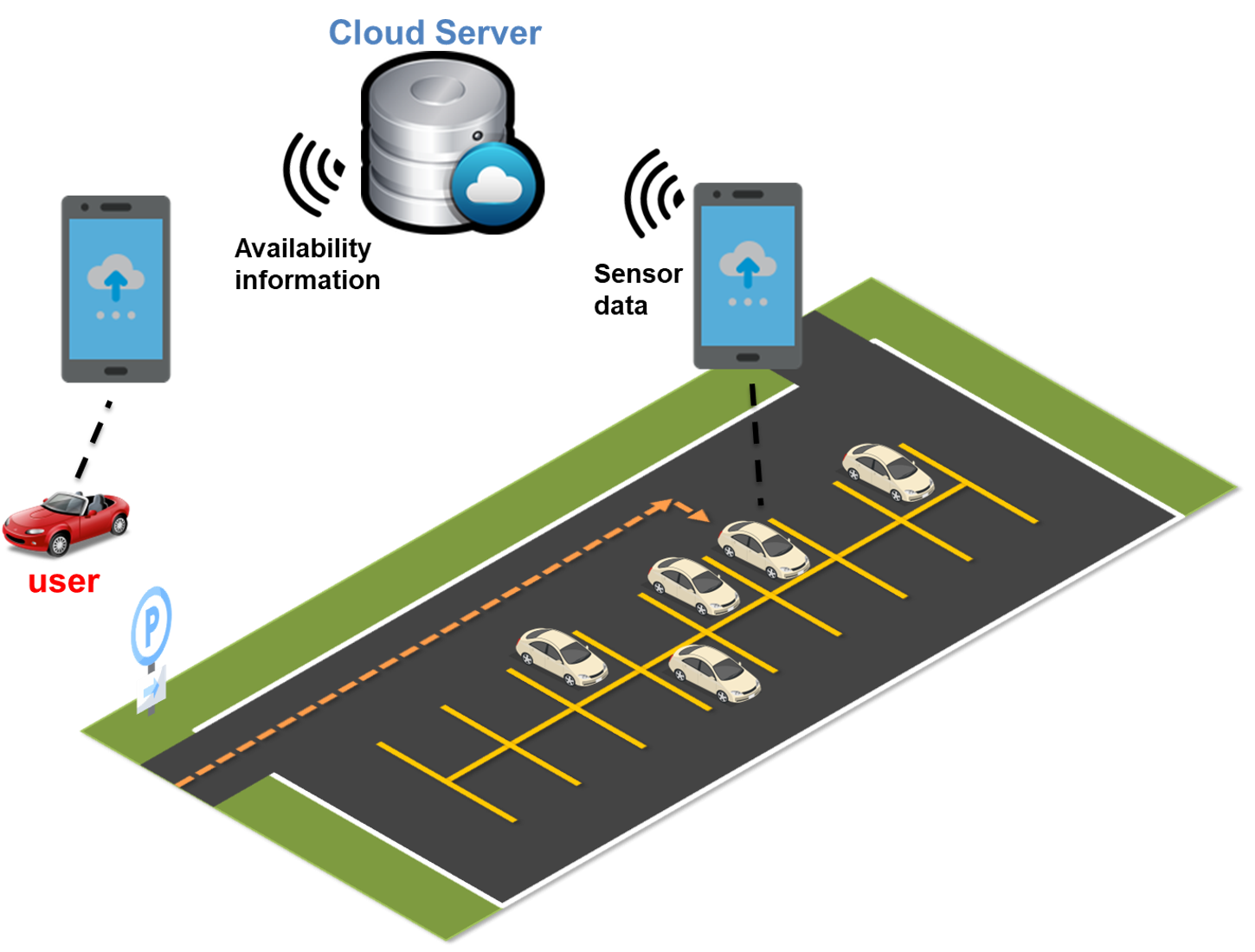

Driver Behavior-aware Parking Availability Crowdsensing System Using Truth Discovery

Yi Zhu, Abhishek Gupta, Shaohan Hu, Weida Zhong, Lu Su, and Chunming Qiao.

ACM Transactions on Sensor Networks.


[PDF]

Spot-level parking availability information (the availability of each spot in a parking lot) is in great demand, as it can help reduce the time and energy wasted while searching for a parking spot. In this article, we propose a crowdsensing system called SpotE that can provide spot-level availability in a parking lot using drivers’ smartphone sensors. SpotE requires only the sensor data from drivers’ smartphones, which avoids the high cost of installing additional sensors and enables large-scale outdoor deployment. We propose a new model that can use the parking search trajectory and final destination (e.g., an exit of the parking lot) of a single driver to generate a probability profile containing the probability of each spot in the parking lot being occupied. To deal with conflicting estimation results from different drivers, caused by variance in their parking behaviors, we propose a novel aggregation approach, SpotE-TD. The proposed aggregation method is based on truth discovery techniques and can handle variation in the Quality of Information of different vehicles. We evaluate the proposed method through a real-life deployment study. Results show that SpotE-TD can efficiently provide spot-level parking availability information with 20% higher accuracy than the state of the art.
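The general idea behind truth-discovery aggregation can be sketched as a generic CRH-style loop. This is an assumption for illustration, not SpotE-TD itself, which additionally accounts for driver behavior: it alternates between estimating each spot's occupancy probability as a weighted mean of the drivers' reports and re-weighting each driver by how closely their reports match those estimates. The function name, the squared-error loss, and the log-ratio weight rule are hypothetical choices.

```python
import math


def truth_discovery(reports: dict[str, dict[int, float]], n_spots: int,
                    iters: int = 20, eps: float = 1e-9):
    """Aggregate per-driver occupancy probabilities with a CRH-style loop.

    reports[driver][spot] is that driver's estimated probability that `spot`
    is occupied; drivers may report on only a subset of spots.
    Returns (per-spot aggregated probabilities, per-driver weights).
    """
    weights = {d: 1.0 for d in reports}
    truths = [0.5] * n_spots  # neutral start; kept for spots nobody reports
    for _ in range(iters):
        # Step 1: each spot's estimate is the weighted mean of its reports.
        for j in range(n_spots):
            num = sum(weights[d] * r[j] for d, r in reports.items() if j in r)
            den = sum(weights[d] for d, r in reports.items() if j in r)
            if den > 0:
                truths[j] = num / den
        # Step 2: drivers whose reports sit closer to the current estimates
        # (smaller squared error) receive larger weights.
        losses = {d: sum((p - truths[j]) ** 2 for j, p in r.items()) + eps
                  for d, r in reports.items()}
        total = sum(losses.values())
        weights = {d: math.log(total / loss) for d, loss in losses.items()}
    return truths, weights
```

With two drivers who roughly agree and one outlier, the outlier's weight shrinks over the iterations, so the aggregated probabilities move toward the consistent pair; spots that no driver observed keep the neutral value of 0.5.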
